TELE Greenland said in a statement that it has ruled out Huawei as a supplier for a future 5G rollout. Currently, the carrier has some Huawei gear in its network, although Ericsson is the principal supplier of its 4G network. TELE Greenland is able to provide mobile broadband to 92% of the population.

The population of Greenland is approximately 56,000.

https://telepost.gl/da/nyheder/tele-greenland-as-om-4g-mobile-first-og-5g-i-groenland

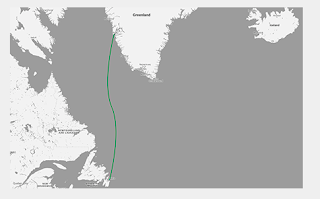

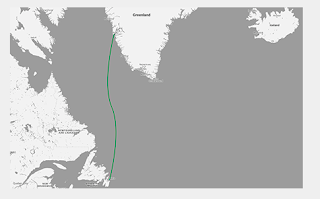

Subsea connectivity restored to northern Greenland

Following repairs to a subsea cable linking Greenland and Canada, connectivity has been restored to customers north of the town of Sisimiut. The population of South Greenland will have to wait another 15-20 days before the southern branch of the damaged subsea cable is repaired.

Greenland is an autonomous territory of Denmark with a population of about 56,000, most of whom live along fjords in the southwest.

TELE-POST is the service provider for Greenland.

The subsea cable to Canada was damaged in late December 2018. Since then, an older microwave radio network has been providing service via a second subsea cable to Iceland. Weather has been a factor in scheduling the repair ship.

https://telepost.info/da