Intel introduced its "Nervana" platform and outlined its broad strategy for artificial intelligence (AI), encompassing a range of new products, technologies and investments from the edge to the data center.

Intel currently powers 97 percent of data center servers running AI workloads on its existing Intel Xeon processors and Intel Xeon Phi processors, along with more workload-optimized accelerators, including FPGAs (field-programmable gate arrays).

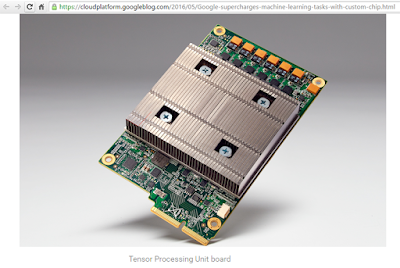

Intel said the breakthrough technology it acquired from Nervana earlier this summer will be integrated into its product roadmap. Intel will test first silicon (code-named “Lake Crest”) in the first half of 2017 and will make it available to key customers later in the year. Lake Crest is optimized specifically for neural networks to deliver the highest performance for deep learning and offers unprecedented compute density with a high-bandwidth interconnect. In addition, Intel announced a new product on the roadmap (code-named “Knights Crest”) that tightly integrates best-in-class Intel Xeon processors with the technology from Nervana.

“We expect the Intel Nervana platform to produce breakthrough performance and dramatic reductions in the time to train complex neural networks,” said Diane Bryant, executive vice president and general manager of the Data Center Group at Intel. “Before the end of the decade, Intel will deliver a 100-fold increase in performance that will turbocharge the pace of innovation in the emerging deep learning space.”

Bryant also announced that Intel expects the next generation of Intel Xeon Phi processors (code-named “Knights Mill”) to deliver up to 4x better deep learning performance than the previous generation, and that it will be available in 2017.

In addition, Intel announced it is shipping a preliminary version of the next generation of Intel Xeon processors (code-named “Skylake”) to select cloud service providers. With AVX-512 (Advanced Vector Extensions 512), an integrated acceleration feature, these Intel Xeon processors will significantly boost inference performance for machine learning workloads. Additional capabilities and configurations will be available when the platform family launches in mid-2017, to meet the full breadth of customer segments and requirements.

Intel also highlighted its Saffron Technology, which leverages memory-based reasoning techniques and transparent analysis of heterogeneous data.

https://newsroom.intel.com/editorials/krzanich-ai-day/

Intel to Acquire Nervana for AI

Intel agreed to acquire Nervana Systems, a San Diego, California-based start-up, for its work in machine learning. Financial terms were not disclosed.

Nervana, which was founded in 2014, developed a software and hardware stack for deep learning.

Intel said the Nervana technology would help optimize the Intel Math Kernel Library and its integration into industry-standard frameworks, improving the deep learning performance and total cost of ownership (TCO) of Intel Xeon and Intel Xeon Phi processors.

http://www.intel.com