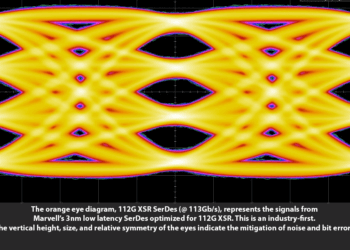

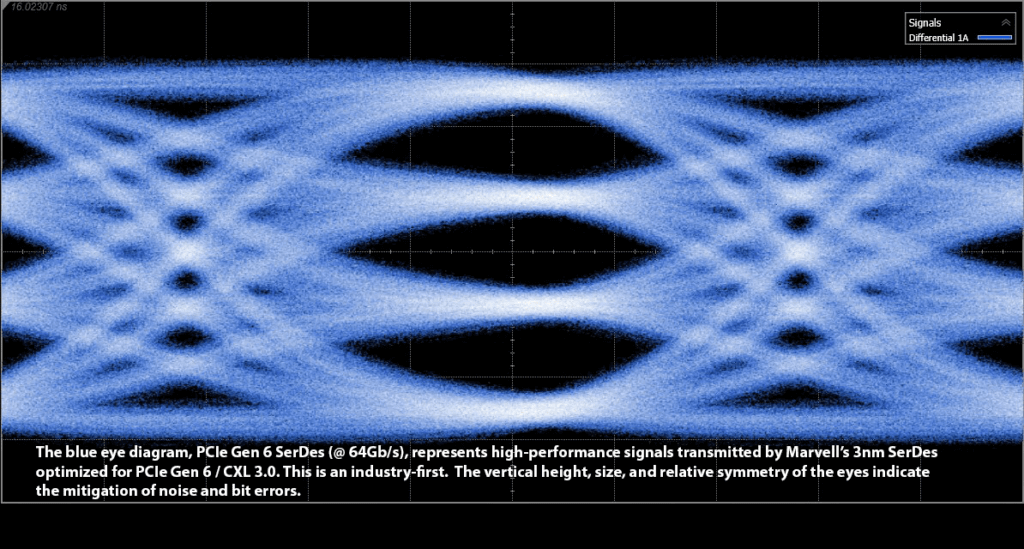

Marvell demonstrated high-speed, ultra-high-bandwidth silicon interconnects produced on TSMC's 3-nanometer (3nm) process. The demonstrated technologies include Marvell's 112G XSR SerDes (serializer/de-serializer), Long Reach SerDes, PCIe Gen 6 / CXL 3.0 SerDes, and a 240 Tbps parallel die-to-die interconnect.

SerDes and parallel interconnects serve as high-speed pathways for exchanging data between chips or silicon components inside chiplets. Together with 2.5D and 3D packaging, these technologies will eliminate system-level bottlenecks to advance the most complex semiconductor designs. SerDes also lower cost by reducing pin counts, trace counts, and circuit board area. A rack in a hyperscale data center might contain tens of thousands of SerDes links.

The new parallel die-to-die interconnect, for example, enables aggregate data transfers up to 240 Tbps, 45% faster than available alternatives for multichip packaging applications.
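To put these figures in perspective, the short sketch below works through the arithmetic they imply. It rests on two assumptions that the announcement does not state: that "45% faster" means the aggregate rate equals the alternative's rate multiplied by 1.45, and that a 112G SerDes lane carries a nominal 112 Gbps with encoding overhead ignored. The function names are illustrative only.

```python
import math

# Figures quoted in the announcement.
AGGREGATE_TBPS = 240.0   # parallel die-to-die interconnect, aggregate rate
SPEEDUP = 0.45           # "45% faster than available alternatives"
SERDES_GBPS = 112.0      # nominal per-lane rate (assumption: no overhead)


def implied_baseline_tbps(aggregate_tbps: float, speedup: float) -> float:
    """Rate of the alternative, assuming aggregate = baseline * (1 + speedup)."""
    return aggregate_tbps / (1.0 + speedup)


def equivalent_serial_lanes(aggregate_tbps: float, lane_gbps: float) -> int:
    """Serial lanes needed to match the aggregate rate, rounded up."""
    return math.ceil(aggregate_tbps * 1000.0 / lane_gbps)


if __name__ == "__main__":
    baseline = implied_baseline_tbps(AGGREGATE_TBPS, SPEEDUP)
    lanes = equivalent_serial_lanes(AGGREGATE_TBPS, SERDES_GBPS)
    print(f"Implied alternative: {baseline:.1f} Tbps")
    print(f"Equivalent 112G serial lanes: {lanes}")
```

Under those assumptions, the implied alternative runs at roughly 165.5 Tbps, and matching 240 Tbps with serial links alone would take more than two thousand 112G lanes, which illustrates why a parallel interface is attractive for die-to-die traffic.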

Marvell incorporates its SerDes and interconnect technologies into its flagship silicon solutions including Teralynx switches, PAM4 and coherent DSPs, Alaska Ethernet physical layer (PHY) devices, OCTEON processors, Bravera storage controllers, Brightlane™ automotive Ethernet chipsets, and custom ASICs. Moving to a 3nm process enables engineers to lower the cost and power consumption of chips and computing systems while maintaining signal integrity and performance.

"Interconnects are taking on heightened importance as clouds and other computing systems grow in size, complexity and capability. Our advanced SerDes and parallel interfaces will play a significant role in providing a platform for developing chips with best-in-class bandwidth, latency, bit error rate, and power efficiency for meeting the demands of AI and other complex workloads," said Raghib Hussain, president of products and technologies at Marvell. "We are proud to be able to deliver such advances on TSMC's 3nm technology and take semiconductor designs to the next level for our customers around the world."

- Marvell was also the first data infrastructure silicon supplier to sample, and then commercially release, 112G SerDes, and it has been a leader in data infrastructure products based on TSMC's 5nm process.

https://www.marvell.com/company/newsroom/marvell-demonstrates-industrys-first-3nm-data-infrastructure-silicon.html