Google has unveiled its next-generation A3 GPU VMs, designed for training and serving the most demanding generative AI and large language model applications.

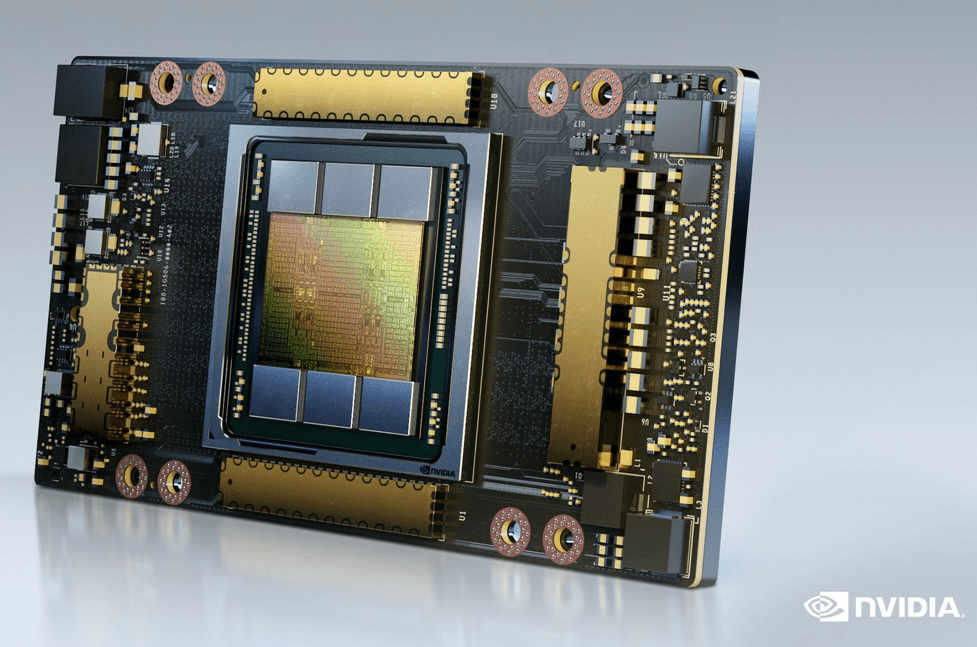

Google Compute Engine A3 VMs combine NVIDIA H100 Tensor Core GPUs with Google’s custom-designed 200 Gbps IPUs (infrastructure processing units). GPU-to-GPU data transfers bypass the CPU host and flow over interfaces separate from other VM network and data traffic. Google says this design enables up to 10x more network bandwidth than its A2 VMs, with low tail latencies and high bandwidth stability.

Google also notes that its Jupiter data center networking fabric scales to tens of thousands of highly interconnected GPUs and allows for full-bandwidth reconfigurable optical links that can adjust the topology on demand.

Highlights of the A3:

- 8 H100 GPUs utilizing NVIDIA’s Hopper architecture, delivering 3x compute throughput

- 3.6 TB/s bisection bandwidth between A3’s 8 GPUs via NVIDIA NVSwitch and NVLink 4.0

- Next-generation 4th Gen Intel Xeon Scalable processors

- 2 TB of host memory via 4800 MHz DDR5 DIMMs

- 10x greater networking bandwidth powered by Google’s hardware-enabled IPUs, a specialized inter-server GPU communication stack, and NCCL optimizations
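The 3.6 TB/s figure in the list above can be sanity-checked with simple arithmetic. The sketch below assumes each H100 GPU provides 900 GB/s of aggregate (bidirectional) NVLink 4.0 bandwidth, which is NVIDIA's published figure for the SXM part and is not stated in the announcement; it also assumes the switch fabric is non-blocking. The function and constant names are illustrative only.

```python
# Assumption (not from the announcement): 900 GB/s of aggregate bidirectional
# NVLink 4.0 bandwidth per H100 SXM GPU, per NVIDIA's published spec.
NVLINK_GB_S_PER_GPU = 900
GPUS_PER_VM = 8  # from the highlights list


def bisection_bandwidth_tb_s(num_gpus: int, per_gpu_gb_s: float) -> float:
    """Bandwidth across a cut that splits the GPUs into two equal halves.

    With a non-blocking switch, every GPU in one half can drive its full
    link bandwidth toward the other half, so the cut carries
    (num_gpus / 2) * per_gpu_gb_s.
    """
    if num_gpus % 2 != 0:
        raise ValueError("num_gpus must be even to form two equal halves")
    return (num_gpus // 2) * per_gpu_gb_s / 1000  # GB/s -> TB/s


print(bisection_bandwidth_tb_s(GPUS_PER_VM, NVLINK_GB_S_PER_GPU))  # 3.6
```

Under these assumptions, four GPUs on each side of the cut at 900 GB/s apiece gives 3.6 TB/s, which matches the quoted number.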

“Google Cloud's A3 VMs, powered by next-generation NVIDIA H100 GPUs, will accelerate training and serving of generative AI applications,” said Ian Buck, vice president of hyperscale and high performance computing at NVIDIA. “On the heels of Google Cloud’s recently launched G2 instances, we're proud to continue our work with Google Cloud to help transform enterprises around the world with purpose-built AI infrastructure.”

https://cloud.google.com/blog/products/compute/introducing-a3-supercomputers-with-nvidia-h100-gpus