AI is driving Google to rethink all its products and services, said Sundar Pichai, Google CEO, at the company's Google I/O event in Mountain View, California. Recent strides have pushed AI past human vision in real-world image recognition, while speech recognition is now widely deployed in smartphone applications to provide a better input interface for users. However, it is one thing for AI to win at chess or a game of Go; getting AI to work at scale is a significantly greater task. Everything at Google is big, and here are some recent numbers:

- Seven services, now with over a billion monthly users: Search, Android, Chrome, YouTube, Maps, Play, Gmail

- Users on YouTube watch over a billion hours of video per day.

- Every day, Google Maps helps users navigate 1 billion km.

- Google Drive now has over 800 million active users; every week 3 billion objects are uploaded to Google Drive.

- Google Photos has over 500 million active users, with over 1.2 billion photos uploaded every day.

- Android is now running on over 2 billion active devices.

Google is already pushing AI into many of these applications. Google Maps, for example, now uses machine learning to identify street signs and storefronts. YouTube is applying AI to generate better suggestions on what to watch next. Google Photos is using machine learning to deliver better search results, and Android has smarter predictive typing.

Another way that AI is entering the user experience is through voice as an input for many products. Pichai said the improvements in voice recognition over the past year have been amazing. For instance, the Google Home speaker uses only two onboard microphones to assess direction and to identify the user in order to better serve customers. The company also claims that its image recognition algorithms have now surpassed humans (although it is not clear what the metrics are). Another of the big announcements at this year's Google I/O event concerned Google Lens, a new in-app capability to layer augmented reality on top of images and videos. The company demonstrated taking a photo of a flower and then asking Google to identify it.

Data centres will have to support the enormous processing challenges of an AI-first world. The network, in turn, will have to deliver the I/O bandwidth both for connecting users to the data centres and for connecting specialised AI servers within and between Google data centres. Pichai said this involves nothing less than rearchitecting the data centre for AI.

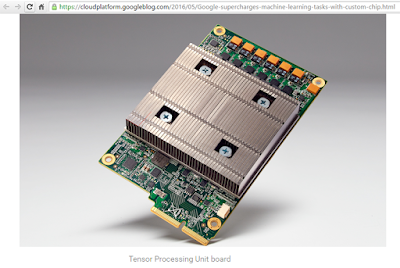

Google's AI-first data centres will be packed with Tensor Processing Units (TPUs) for machine learning. TPUs, which Google launched last year, are custom ASICs that are about 30-50 times faster and more power efficient than general purpose CPUs or GPUs for certain machine learning functions. TPUs are tailored for TensorFlow, an open source software library for machine learning that was developed at Google. TensorFlow was originally developed by the Google Brain team and released under the Apache 2.0 open source license in November 2015. At the time, Google said TensorFlow would be able to run on multiple CPUs and GPUs. Parenthetically, it should also be noted that in November 2016 Intel and Google announced a strategic alliance to help enterprise IT deliver an open, flexible and secure multi-cloud infrastructure for their businesses. One area of focus is the optimisation of the open source TensorFlow library to benefit from the performance of Intel architecture. The companies said their joint work will provide software developers an optimised machine learning library to drive the next wave of AI innovation across a range of models including convolutional and recurrent neural networks.

A quick note about the technology: Google describes TensorFlow as an open source software library for numerical computation using data flow graphs. Nodes in the graph represent mathematical operations, while the graph edges represent the multidimensional data arrays (tensors) communicated between them.
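To make the data flow graph idea concrete, here is a minimal sketch in plain Python. It is not TensorFlow's API: the `Node` class, the `constant`, `matmul`, `add` and `evaluate` helpers, and the use of nested lists as tensors are all hypothetical names chosen for illustration. Each node holds an operation, and the values passed along the edges between nodes are the tensors.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

# Illustration only: a rank-2 tensor represented as nested Python lists.
Tensor = List[List[float]]


@dataclass
class Node:
    """A graph node: an operation plus the nodes whose outputs feed it."""
    name: str
    op: Callable[..., Tensor]
    inputs: Sequence["Node"] = ()


def constant(name: str, value: Tensor) -> Node:
    # A source node with no incoming edges; it simply emits a fixed tensor.
    return Node(name, lambda: value)


def matmul(a: Tensor, b: Tensor) -> Tensor:
    # Matrix product of two rank-2 tensors.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a: Tensor, b: Tensor) -> Tensor:
    # Element-wise sum of two tensors of the same shape.
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def evaluate(node: Node, cache=None) -> Tensor:
    # Walk the graph depth-first, computing each node once.
    # The tensors returned here are what flows along the edges.
    if cache is None:
        cache = {}
    if node.name not in cache:
        args = [evaluate(parent, cache) for parent in node.inputs]
        cache[node.name] = node.op(*args)
    return cache[node.name]


# Build the graph for y = x * w + b, then run it.
x = constant("x", [[1.0, 2.0]])
w = constant("w", [[3.0], [4.0]])
b = constant("b", [[1.0]])
xw = Node("xw", matmul, (x, w))
y = Node("y", add, (xw, b))

result = evaluate(y)
print(result)  # [[12.0]]
```

Separating the description of the graph from its evaluation is what allows a runtime to place the same operations on different hardware; the sketch above only evaluates on the local interpreter.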

Here come TPUs on the Google Compute Engine

At its recent I/O event, Google introduced the next generation of Cloud TPUs, which are optimised for both neural network training and inference on large data sets. TPUs are mounted on boards; each board carries four TPU chips and delivers up to 180 trillion floating point operations per second. Sixty-four boards are then combined into a pod, which is capable of 11.5 petaflops. This is an important advance for data centre infrastructure design for the AI era, said Pichai, and it is already being used within the Google Compute Engine service. This means that the same racks of TPU hardware can now be purchased online like any of the other Google cloud services.
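The pod figure can be checked with some quick arithmetic. The sketch below assumes that the 180 teraflops figure is the peak for one four-chip board and that a pod holds 64 boards, as in Google's announcement; the constant and function names are made up for illustration.

```python
# Back-of-the-envelope check of the quoted Cloud TPU pod throughput.
TFLOPS_PER_BOARD = 180   # assumption: quoted peak for one four-chip board
CHIPS_PER_BOARD = 4      # four TPU chips per board
BOARDS_PER_POD = 64      # assumption: board count per pod from Google's announcement


def pod_petaflops(boards: int = BOARDS_PER_POD,
                  tflops_per_board: float = TFLOPS_PER_BOARD) -> float:
    # 1 petaflops = 1,000 teraflops
    return boards * tflops_per_board / 1000.0


def chip_teraflops(tflops_per_board: float = TFLOPS_PER_BOARD,
                   chips: int = CHIPS_PER_BOARD) -> float:
    # Peak throughput implied for a single chip on the board.
    return tflops_per_board / chips


print(pod_petaflops())   # 11.52, quoted as 11.5 petaflops
print(chip_teraflops())  # 45.0
```

Under those assumptions the numbers are consistent: 64 boards at 180 teraflops each give roughly 11.5 petaflops, which implies about 45 teraflops per chip.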

Sundar Pichai shared an interesting photo with the audience, taken inside a Google data centre, which shows four racks of equipment packed with TensorFlow TPU pods. Bundles of fibre pairs linking the four racks can be seen. There appears to be no top-of-rack switch, although that is not certain. It will be interesting to see whether these new designs will soon fill a meaningful portion of the data centre floor.