Cloud Tensor Processing Units (TPUs) are now available in beta on the Google Cloud Platform (GCP).

The Cloud TPUs are a family of Google-designed hardware accelerators that are optimized to speed up and scale up specific machine learning (ML) workloads programmed with TensorFlow.

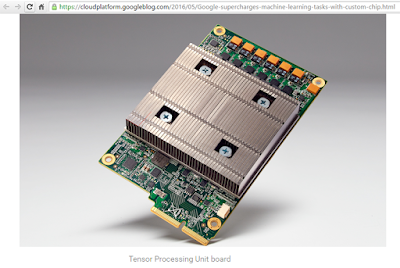

Each Cloud TPU is built with four custom ASICs and packs up to 180 teraflops of floating-point performance and 64 GB of high-bandwidth memory onto a single board. The boards can be connected via an ultra-fast network to form multi-petaflop ML supercomputers. These "TPU pods" will be available on GCP later this year.
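To put the quoted figures in perspective, the short Python sketch below works through the arithmetic. It rests on two assumptions that the announcement does not state: that a board's totals are split evenly across its four ASICs, and that peak throughput adds up linearly when boards are networked together. The function names are illustrative only, and the results are back-of-the-envelope estimates rather than published specifications.

```python
import math

# Figures quoted in the article for one Cloud TPU board.
BOARD_TFLOPS = 180.0   # peak teraflops per board ("up to")
BOARD_HBM_GB = 64.0    # high-bandwidth memory per board, in GB
ASICS_PER_BOARD = 4

TFLOPS_PER_PFLOPS = 1000.0


def per_asic_share(board_total: float, asics: int = ASICS_PER_BOARD) -> float:
    """Assumption: the board total is split evenly across its ASICs."""
    return board_total / asics


def boards_for_petaflops(target_pflops: float,
                         board_tflops: float = BOARD_TFLOPS) -> int:
    """Smallest board count whose summed peak meets the target.

    Assumption: peak throughput adds up linearly across boards.
    """
    return math.ceil(target_pflops * TFLOPS_PER_PFLOPS / board_tflops)


if __name__ == "__main__":
    print(per_asic_share(BOARD_TFLOPS))   # 45.0 teraflops per ASIC
    print(per_asic_share(BOARD_HBM_GB))   # 16.0 GB per ASIC
    print(boards_for_petaflops(1.0))      # 6 boards for one peak petaflop
    print(boards_for_petaflops(10.0))     # 56 boards for ten peak petaflops
```

Under these assumptions, six boards are enough to pass one peak petaflop, so a "multi-petaflop" pod implies at least a dozen or so boards on the network.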

The Cloud TPUs can be programmed with high-level, open source TensorFlow APIs. GCP is making a number of reference Cloud TPU model implementations available, including:

- ResNet-50 and other popular models for image classification

- Transformer for machine translation and language modeling

- RetinaNet for object detection

Google adds that its Cloud TPUs can also simplify the planning and management of ML computing resources. By purchasing the service, users benefit from the large-scale, tightly integrated ML infrastructure that has been heavily optimized at Google over many years. The Cloud TPUs are protected by the security mechanisms and practices that safeguard all Google Cloud services.

Google Builds a Custom ASIC for Machine Learning

The Tensor Processing Unit (TPU) is tailored for TensorFlow, which is an open source software library for machine learning that was developed at Google.

In a blog post, Norm Jouppi, Distinguished Hardware Engineer at Google, discloses that the TPUs have already been deployed in Google data centers for over a year, where they "deliver an order of magnitude better-optimized performance per watt for machine learning." The stealthy project to develop in-house silicon has been underway for several years.

A number of Google applications are already running on the Tensor Processing Units, including RankBrain, Street View, and the AlphaGo application that recently defeated the Go world champion, Lee Sedol.

Google plans to deliver machine learning as a service on its Google Cloud Platform by providing APIs for computer vision, speech, human language translation, and other tasks.

https://cloudplatform.googleblog.com/2016/05/Google-supercharges-machine-learning-tasks-with-custom-chip.html

- TensorFlow was originally developed by the Google Brain team and released under the Apache 2.0 open source license in November 2015. At the time, Google said TensorFlow could run on multiple CPUs and GPUs.